Interview by Christopher Michael

dmstfctn (pronounced demystification) are a UK-based artist duo whose work investigates the complex systems which make up the contemporary world. To date, their collaborative practice has explored such topics as offshore financing, machine learning, and network effects. Their work attempts to make intricate networks comprehensible and accessible to large audiences to create an understanding of the technological processes that affect our everyday lives. Essentially, they seek to demystify the technologies that make up our world.

Recently, dmstfctn was awarded this year’s edition of the Edigma Award, the annual SEMIBREVE award that celebrates and promotes the creation of works exploring interactivity, sound, and image through the use of digital technologies. The winning work, GOD MODE (ep. 1), is an interactive audiovisual performance telling the fictional story of a frustrated AI that trains to recognise objects and eventually finds a cheat to escape its simulated training environment.

Set in the space of a supermarket, it demonstrates to audiences the modes by which machine learning is being implemented in everyday spaces. It calls into question ideas of language, perception, and perspective within AI tools. With the rise of systems like Amazon’s cashierless supermarkets, dmstfctn seek to pull back the curtain on how machine learning processes function in and help form contemporary environments.

GOD MODE (ep. 1) is a multifaceted live performance piece. The piece runs on a game engine, and the artists use a game controller to navigate the fictional space while bringing the AI character to life via facial motion capture and voice modulation. Audiences can interact with the virtual space and help the AI by pressing buttons on their phones, which remotely sync to the simulation. To add to the experience, the artist HERO IMAGE performs a live soundtrack for the piece’s duration. While the piece has toured and shown at UNSOUND, Impakt, and Serpentine (where the artists worked with the Arts Technologies Programme on the game’s development), SEMIBREVE festival 2023 will be the first time it is presented as a standalone installation.

Like most of dmstfctn’s previous work, GOD MODE (ep. 1) was born out of direct engagement with the technologies and systems they examine. Following Alan Blackwell’s example, they treat AI not only as a laboratory science but also as craft, aiming to reach this halfway-knowing point of AI through fictional storytelling. By working in this mode, they can expose new truths about these technologies and the changing environments in which they exist. Their work is a unique access point for understanding such intricate systems as machine learning processes, and GOD MODE (ep. 1) offers audiences a new perspective to engage with complex technologies.

Your practice looks at complex systems (machine learning, offshore finance or network effects), seeking to demystify intricate technological processes. How do you approach each project, and what considerations do you take prior to making work?

Our work typically orbits a theme for several years, with direct involvement from ourselves and our audiences with the technologies and systems we want to discuss. This involvement is not something that can occur prior to making the work; instead, it happens through its making.

We often employ a process of modelling, mimicking and replicating a system and then invite audiences into it at different points and in more or less direct manners to bring them towards a position of partial knowledge. We recognise that there are different analytic levels upon which such systems can be explored. Some of our recent work with AI has followed both Matteo Pasquinelli’s call to move from “what if AI” to “what is AI”, aiming to demonstrate through the use of actual machine learning techniques, and Alan Blackwell’s call for a critical technical practice which treats AI not only as a laboratory science but also as craft, aiming to reach this halfway-knowing point of AI through fictional storytelling.

Your recent work, GOD MODE (ep. 1), was awarded the Edigma Semibreve Award for Semibreve 2023. How did this piece come about, and what were some of your inspirations going into it?

The Alan Turing Institute funded GOD MODE (ep. 1) through their Public Engagement Grant programme in 2022 as part of their effort to introduce AI topics to non-scientific audiences. As part of it, we collaborated with Coventry University, Serpentine’s Arts Technologies and their Creative AI lab to deliver two artworks investigating the complex relationship between simulation, AI training and game engines.

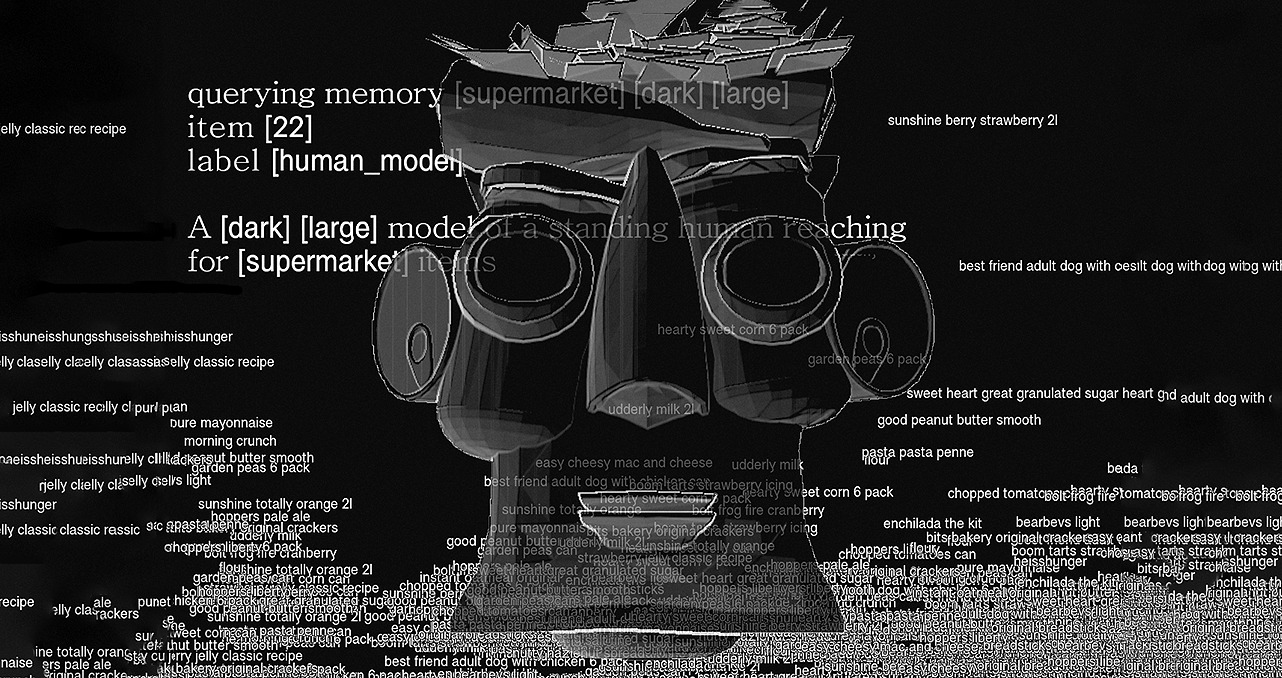

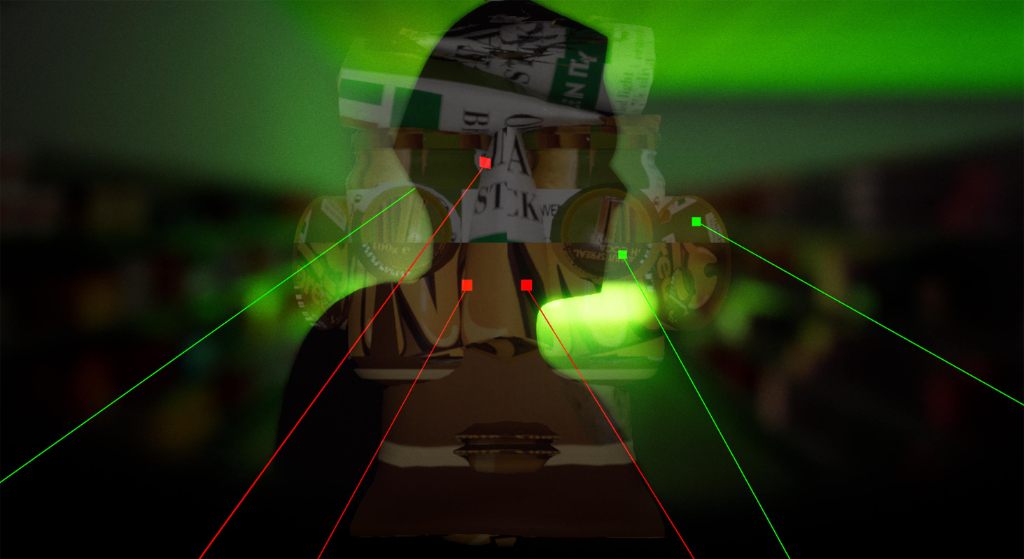

GOD MODE (ep. 1) was the first artwork. It was initially developed as an interactive audiovisual performance telling the fictional story of a frustrated AI training to recognise objects and eventually finding a cheat to escape its simulated training environment. The environment is rendered in real-time using a video game engine, and we navigate it with a game controller. We use facial motion capture and voice modulation to animate the AI character on screen.

At the same time, artist HERO IMAGE performs a live soundtrack, and the audience can help the AI train and later cheat by simply pressing a button on their phones – which remotely connects to the simulation. After touring Unsound, IMPAKT and Serpentine in 2022, GOD MODE (ep. 1) is being presented for the first time at Semibreve 2023 as a standalone installation with music, monologues and navigation built into the simulation, preserving the possibility of real-time audience interaction.

The focus of this work was the increasingly common use of synthetic data for AI training – images or videos of 3D environments rendered in real-time using game engines, which are cheaper and faster to obtain than their real-world equivalents. The idea that emerging machine intelligence is being trained to understand the human world through these replicas fascinates us technically and philosophically.

We initially approached the project by exploring the claims of synthetic data vendors who would often frame the technology as a solution to different kinds of human bias and by reasoning around the kind of bias those vendors conveniently omit – the bias inherent to the process of making decisions about what elements to include and exclude in a replica of the human world for a machine to learn from, and how to organise and categorise those elements. This led us to question what kind of perspectives the AI may inherit from this simulated training process.

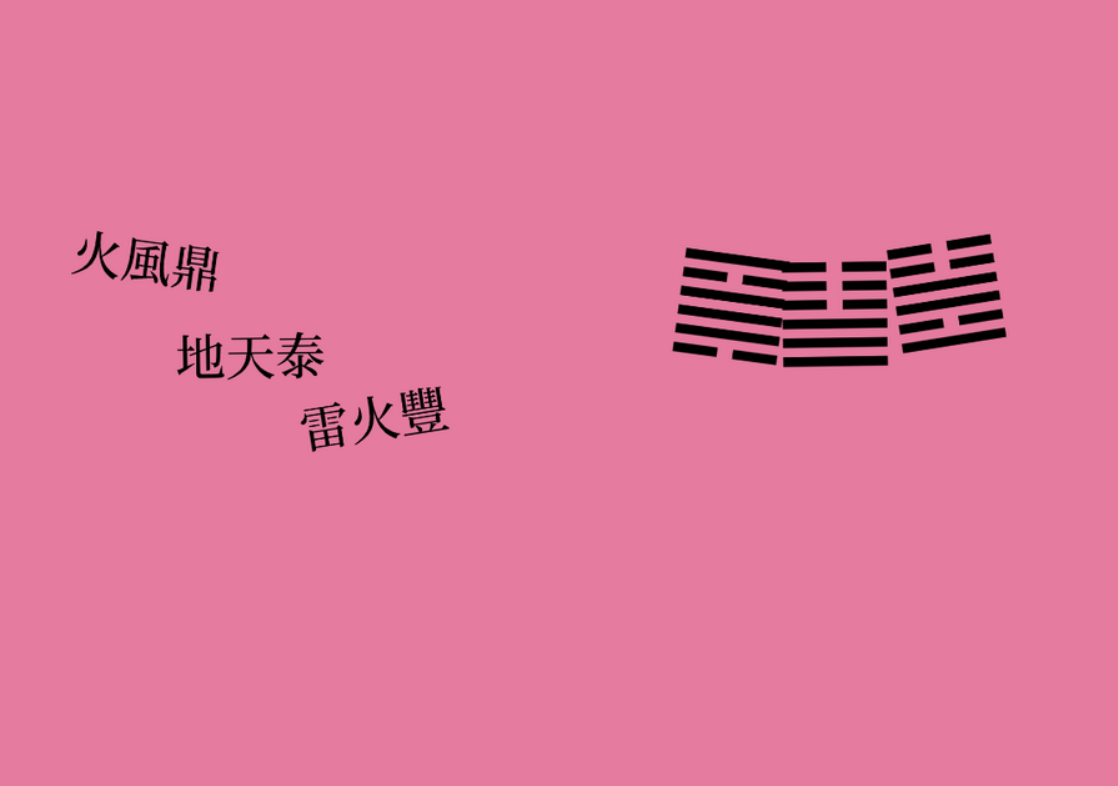

The answer isn’t straightforward, and different perspectives are offered throughout the piece through the evolving AI character’s monologue and appearance. These were constructed following a 4-part narrative arc inspired by “Kishōtenketsu” – a structure common in Asian comics and poems, which we discovered while reading up on the level and gameplay design of Super Mario 3D Land. Like Kishōtenketsu, our arc features an introduction (environment generation), a moment of hardship (AI training), a turning point (AI cheating) and a result (training escaped), to which we add an epilogue hinting at the possible themes of episode 2, which we are currently developing.

The AI’s monologue is initially produced by prompting a large language model to impersonate the AI character in the simulation. This is framed within the narrative as being for debugging purposes, but the speech becomes increasingly subjective and inward-looking as hardship begins, turning into a more nuanced (and less GPT-pilled) monologue through which the AI expresses emotions such as frustration and, at the turning point, joy. Once the AI completes the training, it enters a sort of hallucination where it shifts to talking about itself in the third person, discussing its ability to remember everything but understand nothing – an epilogue we partially quote from Jorge Luis Borges’s short story “Funes the Memorious”.

This evolving self-perception is further manifested through the AI’s appearance, with it initially imagining itself as a human face assembled of the objects it has learned to recognise in previous training epochs. Its face is first imagined upon expressing a desire to be “like one of the human models” in the simulation, as those static human silhouette assets have a collision property turned on and, as such, can “touch” objects rather than observe them. Here, we were inspired by Katherine Hayles’ idea that for many AIs, “language comes first” and “concepts about what it means to be an embodied creature evolve […] out of linguistic signification”.

In other words, AIs are inscriptions rather than incorporations, an inversion of our experience as body-first humans. We also borrowed from Roland Barthes’ analysis of Arcimboldo’s paintings, where items assembled into faces are discussed as “units of language” or synonyms for folkloristic and professional aspects of the subject rather than just mimicking facial characteristics.

As a language-first intelligence, the AI’s self-perceived image is assembled out of items with a linguistic and allegorical significance: a sugar pack labelled “Sweet Heart Sugar” may replace its eyes whenever happy. Labelled and nameable items (a sugar pack) thus produce a combined meaning (heart-eyes) and a fixed allegorical meaning (a feeling of joy or sweetness).

As discussed by mathematician Peli Grietzer, specific neural networks similarly create summaries or mappings of their training dataset, which he calls a “lifeworld”, and that lifeworld must be used to, in turn, represent, interpret or action everything those neural networks are subsequently exposed to. In GOD MODE (ep. 1), individual items are used by the AI to interpret at least its image, increasing in detail as training progresses and rearranging according to emotional states.

GOD MODE (ep. 1) runs on a game engine and asks audiences to interact with it as you might with other virtual gaming experiences. What is the importance of play/playing in this piece?

Coming out of a very online pandemic in 2022, we felt game engines were at an interesting crossover point between rising as a tool of artistic production and rising as a generalised tool for reality production (from synthetic datasets to metaverse experiments). As such, we decided to use game engines to recreate an AI training system through which we tell a fictional story about the perspective AI may gain of our world and its condition.

Allowing audiences to interact with the game engine and the simulated AI training environment is a valuable way to explore a complex technical system together, given that interaction is a game engine’s crucial affordance. Play in this piece is cooperative: between audience members, between the audience and us as performers, and with the environment.

Audiences use their phones to modify the environment’s appearance, which helps the AI train and, later in the story’s narrative arc, cheat. We also play by controlling the AI character’s movement, voice and facial expression. This creates exciting feedback between us, as proxies for the frustrated AI, and the audience, who respond at once to the requirements of the simulation, which prompts them to train the AI, and to the AI’s frustrated (and later relieved) emotional state.

If the audience is calm, we are calm. If the audience is excited, we are excited. This collective role-play as components of a machine learning system attempts to break the idea of the singular ‘an AI’ and instead consider how AI is shaped by its training context and datasets and the intent and assumptions of its human trainers.

GOD MODE (ep. 1) revolves around a simulation of a supermarket. Can you tell us why you chose the environment of the supermarket and what role that played in this project?

We wanted the simulated environment to feel mundane and relatable. London’s cashierless supermarkets, such as Amazon’s, are a familiar interface to a sizeable datafied surveillance system, and we’d suggest the familiarity is part of their design and use as a testbed for new technologies. Much like screen-based interfaces for computers initially took familiar items or metaphors from the world, here familiar if indistinguishable spaces are used. The fact that audiences can relate to this environment makes it an ideal setting for this work to explore and test the limitations of synthetic data as an AI training technology.

Artistically, what interested us with this work was less the application of AI systems, such as their deployment in overpriced urban supermarkets, than the process of producing them. The supermarket is a generalised setting from which we can ask questions about how narrative development, procedural content generation and audience interaction can coexist in a world whose key affordances are being rendered and modified in real-time, and questions around the relationship between language, perspective and perception in AI systems produced within those worlds.

This work also exists as a single-player game (Godmode Epochs), which can be freely downloaded on Steam or played online. What has been your experience performing this work live versus having it exist in a more traditional gaming format?

Godmode Epochs is a single and multiplayer AI training clicker game we developed and released in the first half of 2023. It is the second artwork funded by the Alan Turing Institute’s Public Engagement Grant mentioned earlier.

In Godmode Epochs, each player plays a single AI training epoch featuring a storyline similar to that of GOD MODE (ep. 1). However, while the latter features a beginning and an ending – in line with a traditional approach to storytelling – the game is primarily non-narrative. It tasks players with training an AI to recognise objects. While presenting itself as a conventional clicker game, it features a counterintuitive mechanic whereby players have to perform poorly to progress – as only by frustrating the AI can they unlock a secret “rogue-lite” game level where cheats can be obtained to win the game.

One key difference between the two artworks is the attachment audiences display. GOD MODE (ep. 1) allocates a lot of time to world and character building through evolving monologues and its narrative arc, and this helps audiences feel connected to the AI character even if their participation is relatively limited. In Godmode Epochs, conversely, the monologue is generated solely with GPT-3, responding to players’ actions through frustrated poetry, which is less able to convey the AI’s emotional states and its developing sense of self-perception.

The game, however, is designed to give a lot more control to players and to be pretty addictive, inviting a speedrunning type of play through the constant display of a leaderboard and the possibility of replaying – it has been played over 2000 times since its release in summer 2023. There’s less time to think and more to do, and the attachment we observed here is one to the challenge of winning the game by hacking the system.

What is more important: taking or not taking yourself too seriously to be creative?

We don’t take ourselves too seriously; doing so would seem hostile given the nature of our work, which is often executed live and together with our audiences. As the AI in GOD MODE (ep. 1) says: How can I find anything in such a hostile environment…

We take the work seriously, but especially when engaging with *serious* topics, we always include humorous elements. Like the time we paid Nigel Farage to unknowingly announce our performance “ECHO FX”, which tells how he manipulated financial markets during Brexit referendum night. Or the time we specifically paid for someone called MAD Farmer to be the puppet director of our tax-evading Seychelles company (or was it tax avoidance..?).

This was part of our work on offshore finance, and MAD Farmer was involved in so much dodgy business that we did interviews about him with the New York Times and Bloomberg. We also filed with HMRC multiple artworks documenting our use of the company he directed, so that they would be forever displayed in the UK’s Companies House as a permanent online exhibition.

One for the road… what are you unafraid of?

Lifeworlds filled with images that are just shadows, sounds that are just echoes, zeroes… There’s a lot of meaningful emptiness there, and we enjoy indulging in these Purgatories and their stories. As Hafiz wrote, “Zero is where the real fun starts. There is too much counting everywhere else.” These are the themes of our next interactive performance, which we look at through the lenses of “AI folklore” and are currently developing with support from Serpentine Arts Technologies and in collaboration with a musician we deeply admire. Watch this space.