Text by Olya Karlovich

New York-based artist Ian Cheng has been creating live simulations since 2012. He explores the human mind and “an agent’s ability to deal with an ever-changing environment” by combining AI, video game technologies, and cognitive science. His recent work, Life After BOB: The Chalice Study, reflects on the possible scenarios of our future in an age of rapid technological innovation. What will it look like when we coexist with intelligent machines?

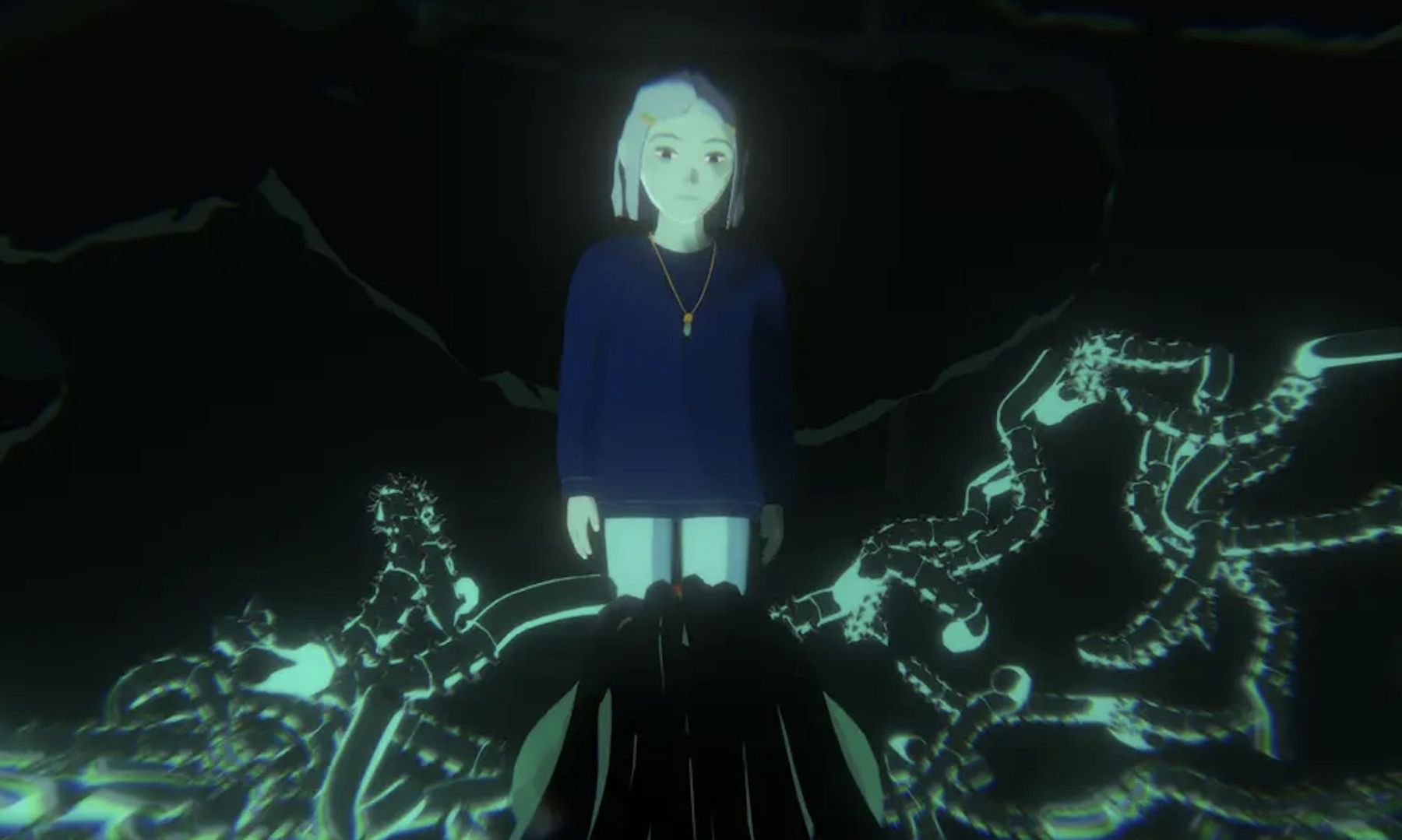

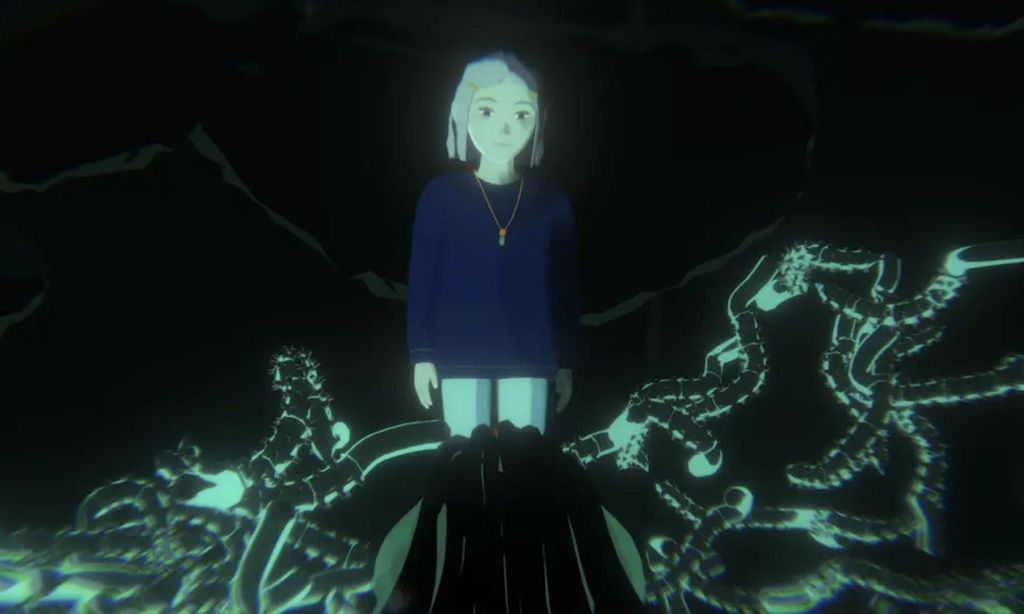

The project is currently touring worldwide, co-commissioned by LAS Berlin (Germany, Berlin), The Shed (USA, New York), and Luma Arles (France, Arles). In autumn, LAS will present it at Halle am Berghain in Berlin. The centre of the exhibition is a narrative animation about a ten-year-old girl named Chalice.

When she was a little child, her father, Dr Wong, implanted an AI assistant, BOB, into her nervous system. BOB was supposed to help Chalice live a meaningful and happy life, coping with the chaos of the world around her, but everything went amiss. While the AI coach was successfully doing its job, the girl grew more irrelevant. As Dr Wong began to favour the BOB side of Chalice, she started wondering: What is left for her to do as a classic human?

Combining compelling storytelling and simulation is the primary formal challenge of Life After BOB and its future episodes (Life After BOB is a planned eight-part anime miniseries). Speaking about the difficulties he encountered while bridging these techniques, Ian Cheng explains: “Simulations are like kids, pets or a park. You set up the initial conditions, help steer its character a bit in its infancy, and then let it go and surprise you. On the other hand, storytelling is very precise and deterministic. When you experience a story, you want to trust that its drama is satisfying and meaningful.”

While working on the project, the artist also had to take into account that film and simulation have different temporalities. He notes that combining them in one work is like playing with flickering. A viewer experiences cinema very intensely, captured by plot twists. But when it comes to simulation, people relax, their eyes wandering over various details and slowly evolving changes.

Life After BOB was created entirely in the Unity video game engine, so the animation is generated afresh for every viewing. No one has made such a long film using this technology before. Cheng, for his part, dreamed of a production environment where rapid iteration was encouraged and updates were the norm.

“I wanted to find a way to turn movie-making into software building. I am betting this will be the norm in the metaverse transmedia future.”

But the innovative ideas go far beyond the film itself. After watching the animation, exhibition visitors will have the opportunity to dive deeper into the Life After BOB universe. Using their smartphones, they will interact with the world on screen: scrolling, zooming in on or slowing down scenes, and focusing on individual objects. Ian Cheng calls it a portal into the world that one can inhabit and roleplay in. The idea to create such a “programmable narrative medium” came to him while reading books to his two-year-old daughter Eden.

“When I read her, for example, The Big Red Barn, she already knows the story of the animals going out to play and then going to bed at the end of the day. She stops me on a page and points at a detail. She says, ‘Why is donkey braying? What is a donkey eating for breakfast? Did he eat chicken eggs? Make chicken talk to me.’ The story is perfunctory. It is simply a vehicle to get to certain pages that spark her imagination and compel her to improvise about the characters and their inter-relationships.”

With Life After BOB, the artist wanted to recreate that childlike wonder and joy of immersing yourself in the story world by making a seamless transition between the main narrative and the imaginary actions of the characters. From time to time, Ian Cheng makes changes to his project. For example, in the opening scenes of Life After BOB version 1, the world background was presented through title cards. But after the premiere, it became clear that they did not register with viewers. “Names and concepts were disconnected from their visceral look and feel. So I scrapped the polite title cards and now have a fully animated introduction which I’m excited about,” the artist adds.

But the most interesting thing is that literally anyone can influence the world of Life After BOB. Inspired by fan fiction and fandom projects such as the Star Wars wiki Wookieepedia, Ian Cheng also launched an AI wiki that collects information about the animation’s characters and artefacts. By editing this database, viewers can modify and expand the details of the world. The artist hopes that as more people see Life After BOB, they might experiment with thinking and writing through the lens of this worldview in the wiki.

At first, the main wiki contributors were Ian himself and his producer Veronica So. They attempted to imagine what belonged and did not belong to the fictional universe’s broader environmental, social, and technological realms, thus developing its aesthetics, atmosphere and worldview. Life After BOB conveys the idea that technology produces surprising chaos that can’t be unseen. And in this stream of constant change, as Ian Cheng notes, humans have to get over their sacred values and try to know themselves more deeply. They try to adapt, and whatever comes of that adaptation will be true, or, at least, interesting.

The exhibition at LAS will run from 9 September to 6 November 2022.