Text by CLOT Magazine

Computing technology is now present in every aspect of music. For some, like Professor Eduardo R. Miranda, understanding the relationship between musical creativity and computing technologies is pivotal for the future of the music industry. This interest led him to create the Interdisciplinary Centre for Computer Music Research (ICCMR), where he has developed ground-breaking research programmes with talented musicians and researchers.

They aim “to gain a better understanding of the impact of technology on creativity” while, at the same time, “looking into ways in which music mediated by technology may contribute to human development and well-being, in particular on health and disability”.

ICCMR began to take shape in 2006 when Prof Miranda, after working for ten years for Sony in Paris carrying out speech recognition research in Artificial Intelligence (AI), decided that his professional and creative needs were not being fulfilled and began looking for a place where he could pursue them. Professor Miranda told us how he built up a new department from scratch and started developing study programmes at the forefront of research in the fields of AI and music interfaces.

He found support for his ideas at the University of Plymouth, where he set up a computing lab. The University of Plymouth has a reputation for its innovative research programmes. Its digital art research and practice have been strongly influenced by Roy Ascott, a seminal figure in the history of digital and telematic art.

When establishing ICCMR, Miranda brought over some of his research from Sony, though with a focus on music composition rather than language evolution. He had the novel idea of using AI to support creativity, inventing systems to help understand and evolve creativity. Working in the field of AI, he naturally developed an interest in studying the brain.

Being at Plymouth University allowed him to meet neuroscientists and, with their help, to start modelling brain functions and developing new fields of research on brain-computer music interfaces. His main idea was to identify patterns in electroencephalograms (or brain waves) that people could use to control musical instruments. This would allow disabled patients to make and play music. This line of research has developed into projects like Activating Memory, a composition for eight performers: a string quartet and a Brain-Computer Music Interface (BCMI) quartet.
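For readers curious about the mechanics, here is a minimal sketch of one common way a BCMI can turn brain activity into a musical choice. It assumes a frequency-tagged design, in which each musical option is linked to a visual stimulus flickering at a known rate, so attending to that option strengthens that frequency in the EEG; the code simply picks the option whose frequency carries the most power. The option names, the 256 Hz sampling rate and the target frequencies are hypothetical, and this is not a description of ICCMR's own system.

```python
import math

def power_at(signal, fs, freq):
    """Power of the single DFT component at `freq` Hz (a naive one-bin DFT)."""
    n = len(signal)
    re = sum(x * math.cos(2 * math.pi * freq * i / fs) for i, x in enumerate(signal))
    im = sum(x * math.sin(2 * math.pi * freq * i / fs) for i, x in enumerate(signal))
    return (re * re + im * im) / n

def pick_option(signal, fs, targets):
    """Return the option whose tagged frequency carries the most power in the EEG window."""
    return max(targets, key=lambda name: power_at(signal, fs, targets[name]))

# Hypothetical setup: three musical phrases, each tagged with a flicker frequency in Hz.
TARGETS = {"phrase_a": 7.0, "phrase_b": 9.0, "phrase_c": 12.0}
FS = 256  # samples per second (assumed)

# Synthetic one-second "EEG" window dominated by a 12 Hz component.
window = [math.sin(2 * math.pi * 12 * i / FS) for i in range(FS)]
print(pick_option(window, FS, TARGETS))  # phrase_c
```

A real system would filter the signal, use several electrodes and average over time before committing to a choice; the sketch only shows the core idea of mapping a measured frequency to a musical decision.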

The BCMI quartet consists of four people wearing caps fitted with electrodes that read information from their brains. Remarkably, a concert was held at the Royal Hospital for Neuro-disability in London in 2015, where four severely motor-impaired patients teamed up with a string quartet to form the Paramusical Ensemble. The composition gave the motor-impaired patients and the professional musicians an opportunity to make music together.

Another prominent line of research at ICCMR is known as biocomputing. The department had an interest in theorising the future of computing. In the field of music, a lot of effort has gone into interfaces or controllers that allow physical interaction with computers. Miranda’s interest, however, turned to what was inside the machine.

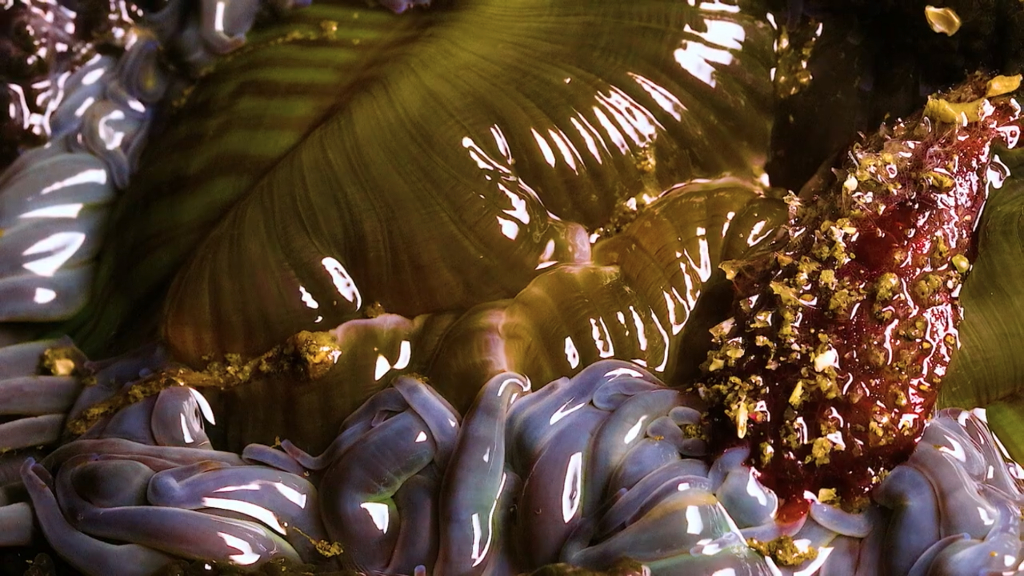

At first, he considered researching the possibilities of quantum computing, but access to this type of technology is difficult and expensive. That’s when they turned to the biocomputing possibilities of the slime mould Physarum polycephalum. The first studies of Physarum polycephalum for computing began in Japan around ten years ago, so it is quite a novel field. Although it is a single-celled organism, it has been described as exhibiting intelligent characteristics: it is able to solve computational problems and even shows some form of memory.

At ICCMR, they began to study the organism’s molecular structure and how its particular functionality could be used for computing purposes and to build creative tools. The most interesting part of the system is that it doesn’t behave in a precise, predictable, linear manner: its behaviour is random yet programmable, so they can use it to generate new data. For instance, musical data is fed into the biological system, and what comes out is a distorted version of that data.

The Biocomputer Music project attracted much attention in the press and was featured in renowned publications such as The Guardian and The Wire. Professor Miranda also told us that the next step for the ICCMR research programme would be to work with synthetic biologists to manufacture key molecular aspects of Physarum polycephalum, such as its voltage, in order to understand the chemistry needed to create the interactive musical computer of the future.

One last remarkable ICCMR research area we would like to mention is data visualisation. At a time when big data is becoming ubiquitous, new ways of making this enormous amount of information more interpretable and comprehensible are vital, and 3D visualisation is a good example. Núria Bonet is a PhD student researching musification, the process that allows the person working with data to interpret it aesthetically. This provides an aesthetic approach to conveying the information contained in the data. The artist interprets the data before the work is made, and the work subsequently engages with its scientific interpretation.

We talked over email with Dr Alexis Kirke, who holds a permanent post as Senior Research Fellow in Computer Music at Plymouth University and has PhDs in artificial neural networks and in computer music. This is what Dr Kirke told us when we asked him when and how his interest in artificial neural networks and computer music came about:

‘I studied at King’s College London for a short time with John Taylor in the maths department, who was a pioneer of artificial neural networks. He was an inspiring speaker, and I became fascinated by them. My first PhD at Plymouth was in the Neurodynamics Research Group, which later became the now-large Cognition Institute. My fascination with neural networks increased as I learned about the then-new field of neural modelling and met visiting pioneers in that area, such as Dan Levine.

‘I later went on to use my knowledge of neural networks on Wall Street, where I worked for a brokerage and we developed a neural network model to help maximise trade profits. I sometimes wonder what my life would have been like if I had stayed in that job. My colleagues became heads of algorithmic trading departments in large Wall Street brokerages! However, I reached a point in my life where I thought: do I want to be on my deathbed thinking that what I achieved in my life was helping people trade stocks more efficiently? No! So I knew I had to get into writing and music, or I would eventually become engrossed in a high-paid Manhattan lifestyle. Not such a disaster, you might think, but I was bursting with ideas and a desire to create art.

‘After a period of false starts (singer-songwriter, novelist, short story writer and dance music producer), I decided to focus on music. Then I heard about a funded PhD opportunity in computer music at Plymouth University. It was a much more financially realistic route than doing a music degree. And of course, without realising it, I found the perfect place to use my combination of experience.’

Extending ICCMR’s collaborative and interdisciplinary research approach across the University, Plymouth hosts the Peninsula Arts Contemporary Music Festival, an annual festival that showcases its most innovative research programmes. The festival is directed by Simon Ible, Director of Music for Peninsula Arts at Plymouth University, and Professor Miranda. It is promoted in partnership with Plymouth University Peninsula Arts and ICCMR.

For this year’s edition, Dr Alexis Kirke presented two performances: A Buddha of Superposition and Come Together: The Sonification of McCartney and Lennon. Kirke explained the intellectual process behind Come Together: The Sonification of McCartney and Lennon and shared his personal connection to the Beatles:

‘I’ve been a huge Beatles fan since my dad introduced me to Sgt Pepper’s in my early teens. Having developed the lyrical analysis method with other artists, and realising that this year was the 60th anniversary of McCartney and Lennon forming the Quarrymen, it seemed a fantastic opportunity to combine my childhood musical enthusiasms with my adult research and composition. The lyrical emotion patterns that I discovered were very exciting and cried out to be turned into a performance. So I composed a vocal duet representing these two people who have had a significant emotional impact on my life.’

The piece, the culmination of seven years of research by Dr Alexis Kirke into the emotional analysis of lyrics, uses computer algorithms to analyse the emotions in the lyrics of both Lennon and McCartney. These emotions are then translated into a classical vocal duet. ‘Using a scientific database of emotionally annotated words, I plotted the emotional positivity and physical intensity of the lyrics of 156 songs by McCartney and 131 songs by Lennon. This word-based emotion was then mapped into musical features and transformed into a classical duet to show how each musician’s happiness developed throughout their friendship.

‘Including references to iconic lyrics from some of their greatest hits, the piece mirrors the real-life events that took place during Lennon and McCartney’s friendship. It opens with the onset of Beatlemania, hinting at popular songs including “I Feel Fine” to highlight the initial joy of their early success. This is followed by Lennon’s plummeting positivity during the band’s split in 1970, and lyrics from “Borrowed Time” are included to signify the lead-up to his assassination in 1980. Performed a cappella, the duet is composed for soprano and tenor voices, each expressing the emotion of one of the songwriters. McCartney’s lyrical happiness will be sonified by the soprano line, and Lennon’s will be encapsulated in the lower pitch of the tenor,’ he told us by email.
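The article does not detail Kirke’s algorithm, but the general idea of word-based emotion mapping can be sketched in a few lines. The example below assumes a tiny invented lexicon of (positivity, intensity) scores on a 1 to 9 scale, averages the scores of the words it recognises in a lyric, and then maps positivity to mode and pitch offset and intensity to tempo. The lexicon values, function names and mapping rules are all hypothetical and are not taken from the database Kirke used.

```python
# Hypothetical lexicon: word -> (positivity, intensity), both on a 1-9 scale.
LEXICON = {
    "love": (8.0, 6.0),
    "happy": (8.5, 6.5),
    "fine": (7.0, 3.5),
    "cry": (2.0, 6.0),
    "alone": (2.5, 3.0),
}

def lyric_emotion(lyric):
    """Average the (positivity, intensity) scores of known words; None if no word is known."""
    words = [w.strip(".,!?'\"").lower() for w in lyric.split()]
    scored = [LEXICON[w] for w in words if w in LEXICON]
    if not scored:
        return None
    positivity = sum(p for p, _ in scored) / len(scored)
    intensity = sum(i for _, i in scored) / len(scored)
    return positivity, intensity

def to_musical_features(positivity, intensity):
    """Hypothetical rules: positivity sets mode and pitch offset, intensity sets tempo."""
    mode = "major" if positivity >= 5.0 else "minor"
    tempo_bpm = round(60 + (intensity - 1) / 8 * 100)  # intensity 1 -> 60 bpm, 9 -> 160 bpm
    pitch_shift = round((positivity - 5.0) * 2)        # semitones above/below a base note
    return {"mode": mode, "tempo_bpm": tempo_bpm, "pitch_shift": pitch_shift}

print(to_musical_features(*lyric_emotion("I feel fine and happy")))
# {'mode': 'major', 'tempo_bpm': 110, 'pitch_shift': 6}
```

Running the same mapping song by song, in chronological order, would give one line of musical features per songwriter over time, which is the kind of trajectory the duet traces.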

Since its inception, the festival has been at the forefront of exploring how musicians, scientists and linguists use new means, forms and usages of voice in communication and musical creativity. Starting as an informal event around six years ago, the festival attracted interest from the press, and the University decided to make it more “official”, giving it extra support. Miranda says this has been positive in several ways; for example, being under press scrutiny has pushed their research projects forward.

Under the theme VOICE 2.0, this year’s edition of the festival explored the reinvention of the voice and also presented new approaches to composition and performance. It opened on Friday 24 February with the talk The Art of Inventing Languages by David J. Peterson, creator of the Dothraki language for HBO’s fantasy series Game of Thrones and of a language for the Marvel Studios film Thor: The Dark World. On Saturday 25 February, The House hosted the Festival Gala Concert under the baton of Simon Ible.

The gala included the world premiere of Vōv by Eduardo R. Miranda and David Peterson, performed by the Peninsula Arts Sinfonietta, in which virtual performers sang a poem on the evolution of love. It also featured Butterscotch Concerto by Eduardo R. Miranda and Butterscotch. As a beatboxer, Butterscotch uses her voice in a unique way, almost like a percussion instrument.

These were examples of how new classical music can be composed in a contemporary context. The festival closed on 26 February with three highlights: The Voice of the Sea by Núria Bonet, in collaboration with the Marine Institute and the Plymouth Coastal Observatory; Come Together: The Sonification of McCartney and Lennon by Alexis Kirke; and Silicon Voices by Marcelo Gimenes.

We headed to Plymouth on a rainy and windy Sunday to attend the last day of the Peninsula Arts Contemporary Music Festival. The first performance we enjoyed was Núria Bonet’s The Voice of the Sea, which ‘(…) uses data from buoys located in Looe Bay, which is just along the coast from Plymouth, in South East Cornwall. The buoy reports its state every 0.78125 seconds and sends a numeric value, so it effectively tells you the height of the waves in real time. It also reports on the wave direction and period and the sea temperature.

These data will be mapped to musical parameters: frequency, tempo, filter coefficients, randomness, spatialisation or whichever other parameter needs determining. The piece will begin with a very literal translation of the data into sound before demonstrating the more complex creative possibilities of the system’.
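As a simple illustration of the “very literal translation” Bonet describes at the start of the piece, the sketch below turns a series of wave-height readings into timed pitch events, one per reporting interval of 0.78125 seconds (about 1.28 readings per second). The pitch range, the 4-metre ceiling, the function names and the linear mapping are assumptions for illustration; the article does not say how her system maps the data.

```python
SAMPLE_PERIOD_S = 0.78125  # buoy reporting interval quoted in the article

def scale(value, in_lo, in_hi, out_lo, out_hi):
    """Linearly map value from [in_lo, in_hi] to [out_lo, out_hi], clamping to the input range."""
    value = min(max(value, in_lo), in_hi)
    return out_lo + (value - in_lo) * (out_hi - out_lo) / (in_hi - in_lo)

def sonify(wave_heights_m, min_hz=110.0, max_hz=880.0, max_height_m=4.0):
    """Turn wave heights (metres) into (onset time in seconds, frequency in Hz) events."""
    events = []
    for i, height in enumerate(wave_heights_m):
        onset = i * SAMPLE_PERIOD_S
        freq = scale(height, 0.0, max_height_m, min_hz, max_hz)
        events.append((onset, round(freq, 2)))
    return events

print(sonify([0.0, 2.0, 4.0, 5.0]))
# [(0.0, 110.0), (0.78125, 495.0), (1.5625, 880.0), (2.34375, 880.0)]
```

A linear height-to-frequency mapping is the most literal choice. Because pitch is perceived roughly logarithmically, mapping heights onto MIDI note numbers or an exponential frequency scale would make equal changes in wave height sound more evenly spaced.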

For Bonet, who, as we mentioned before, is currently a PhD student at ICCMR, it is not easy to choose the most innovative line of research in the department. ‘It’s hard for me to speak about all the research at ICCMR. Still, it is exciting that there are many innovative lines of research (brain-user interfaces, unconventional computing, movement tracking, etc.).

‘And some of this work is ground-breaking; for example, with Alexis Kirke’s work using quantum computing to create music, it is difficult to comprehend exactly where it’s heading. What I can say is that I think we’re heading towards more music. Hence my coming in as a composer to deal with the concepts being developed here in a musical way,’ she says.

After Professor Miranda gave us a tour of the department, we saw Marcelo Gimenes’ piece Silicon Voices. Gimenes holds a PhD in computer music from the University of Plymouth and is currently an Associate for Computer Generated Music Systems.

His projects aim to create unique intelligent music composition tools, such as the iMe (Interactive Musical Environments) computer system, a multi-agent platform designed to support experiments with musical creativity and evolution in artificial societies. Silicon Voices is a piece for contralto and bass voices and live electronics, drawn from Gimenes’ research into music and AI. It showcases software that simulates communication through musical phrases, which evolve into a repertoire of generative music.

His piece was the best way to finish a day full of discoveries and exciting new projects. It was also refreshing to see exciting things happening outside London.
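The article does not describe how iMe works internally. As a loose, hypothetical illustration of the general idea of musical phrases evolving as software agents exchange them, the toy simulation below gives each agent a repertoire of phrases written as MIDI note numbers; in each round one agent plays a phrase and another memorises a slightly altered copy. The function names, parameters and mutation rule are assumptions, not iMe’s design.

```python
import random

def mutate(phrase, rng):
    """Return a copy of the phrase with one random note moved by 1 or 2 semitones."""
    notes = list(phrase)
    i = rng.randrange(len(notes))
    notes[i] += rng.choice([-2, -1, 1, 2])
    return tuple(notes)

def simulate(n_agents=4, rounds=20, seed=1):
    """Toy agent society: repertoires drift as agents copy each other's phrases imperfectly."""
    rng = random.Random(seed)
    seed_phrase = (60, 62, 64, 65)  # C, D, E, F as MIDI note numbers
    repertoires = [{seed_phrase} for _ in range(n_agents)]
    for _ in range(rounds):
        speaker, listener = rng.sample(range(n_agents), 2)
        phrase = rng.choice(sorted(repertoires[speaker]))
        # The listener stores an imperfect copy, so new material enters the society.
        repertoires[listener].add(mutate(phrase, rng))
    return repertoires

for agent, repertoire in enumerate(simulate()):
    print(agent, len(repertoire), "phrases")
```

Seeding the random generator keeps each run reproducible, which makes it easier to compare how a repertoire evolves under different settings.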

Innovative models of computation may provide new directions for future developments in music composition. Professor Eduardo Miranda and the team of creatives in his department will certainly contribute the latest research to this emerging and thrilling interdisciplinary field. New modes of interaction, communication and musical languages between humans and non-human biological systems are the next thing to watch.