Text by Agata Kik

To be more than a human means to be more than his brain, to be more than the cognitive self. It means to be her instinct, to be like an AI machine, programmed by the body’s memories, free from desire, control or will. Artificial Intelligence (AI) is the intelligence exhibited by man-made machines that mimic the human brain and are capable of learning and problem-solving. Although AI is applied to cognitive functions, its automatic behaviour belongs to the body, with which contemporary man has recently lost touch far too profoundly, I think (feel).

The Barbican Centre has just closed the doors on its major exhibition AI: More than Human, a trajectory, almost a time travel, tracing the history of the relationship between human and technology, from its extraordinary ancient roots in Japanese Shintoism, through Ada Lovelace and Charles Babbage’s early experiments in computing, to AI’s major developmental leaps from the 1940s to the present day. Executed through an interdisciplinary approach, the exhibition combined artistic and scientific perspectives. With guest curators Suzanne Livingston and Maholo Uchida on board, the project was probably the most ambitious that Barbican International Enterprises (BIE) has hosted in its space to date. The exhibition was part of Life Rewired, the Barbican’s 2019 season of events exploring a contemporaneity that has become fully dependent on technology.

The vast array of artworks in the exhibition established strong links with one another, pointing to those aspects of the human that AI has the greatest potential to reveal, since, in a way, we created AI to learn more about the human brain. As a result, most of the artworks involved the viewer’s participation and interaction with the work displayed. The viewer felt recognised by the other, not only by a living being but also by a machine. Among the most explored themes were ways of looking, seeing and knowing, as well as the nature and agency of human memory. I came to understand that what we see is what we are programmed to see, so that human experience acts like an AI algorithm.

Artist Ben Grosser’s Computers Watching Movies was computationally produced, using software that employs computer vision algorithms and artificial intelligence routines so that the system retained, to some extent, its free will. Just as the system’s moves were guided by its programming, so the audience, under the delusion that human beings have control over their thinking, was invited to draw upon their own visual memory of a scene as they watched it, and to reflect once more on what they actually feel when they decide what to watch. Elsewhere, Nexus Studios and artist Memo Akten together presented Learning to See, a work that pointed the audience towards how a computer interprets an image. By allowing visitors to manipulate the images, this collaborative work revealed that a computer, just like a human, can see only what it already knows.

To get more familiar with the human, artist Mario Klingemann created a work in which the machine itself performed the act of getting familiar with the human. Attracting the audience’s attention and welcoming their judgement, this constantly evolving piece of live art consisted of a neural network, which the viewer was encouraged to train through the mutually responsive behaviour of audience member and machine. In collaboration with Google Arts & Culture, the artist created a machine that accumulates attention by generating imagery that is interesting to humans.

The system uses artificial intelligence techniques first to acquire data and then to measure the effect of the visual output it generates, learning how to maximise the time humans are willing to spend with it. The work consists of three modules, curatorial building blocks that exhibit the machine learning process. The first is the acquisition of data, when the machine learns about the human. The second is the collection of feedback, when it learns the viewer’s preferences. Finally, once the audience has interacted with the visual material the machine has created, comes the creation step, in which the machine draws on what it has learnt to generate the imagery viewers are likely to find most interesting.
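The three steps described above can be sketched, very loosely, as a feedback loop. This is not Klingemann’s actual system, whose internals are not described here; it is a toy illustration that assumes the machine chooses between a few image styles and is rewarded with viewing time. The class `AttentionLearner`, the style names and the viewing times are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class AttentionLearner:
    """Toy acquire / feedback / create loop (hypothetical, for illustration)."""
    styles: list
    totals: dict = field(default_factory=dict)  # summed viewing seconds per style
    counts: dict = field(default_factory=dict)  # number of viewings per style

    def acquire(self) -> None:
        # Step 1, acquisition: start with an empty record for every style.
        for style in self.styles:
            self.totals[style] = 0.0
            self.counts[style] = 0

    def record_feedback(self, style: str, seconds_watched: float) -> None:
        # Step 2, feedback: log how long a viewer stayed with an image.
        self.totals[style] += seconds_watched
        self.counts[style] += 1

    def create(self) -> str:
        # Step 3, creation: try each style once, then favour the style
        # with the highest average viewing time so far.
        for style in self.styles:
            if self.counts[style] == 0:
                return style
        return max(self.styles, key=lambda s: self.totals[s] / self.counts[s])


# Assumed viewing times in seconds; invented numbers, not exhibition data.
hypothetical_dwell = {"portrait": 4.0, "abstract": 9.0, "landscape": 6.0}

learner = AttentionLearner(styles=list(hypothetical_dwell))
learner.acquire()
for _ in range(6):
    shown = learner.create()
    learner.record_feedback(shown, hypothetical_dwell[shown])

print(learner.create())
```

In this sketch the machine shows each style once and then keeps returning to whichever one held viewers longest. A real system would presumably generate new images rather than pick from a fixed list, but the loop of showing, measuring and adjusting is the same idea.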

Artist and designer Es Devlin, collaborating with Ross Goodwin and, again, Google Arts & Culture, created PoemPortraits. This social sculpture invited the visitor to donate a single word, which an algorithm then incorporated into a two-line poem that also included words from other visitors. The algorithm had been trained on 20 million words before the exhibition, and at the end of the show a collective PoemPortrait was generated from every contribution made by the audience.

Interaction between the human and a machine that is aware of his or her presence was also exhibited by the collective teamLab in What a Loving and Beautiful World, an interactive digital installation and immersive environment whose changes are triggered by the shadows of the viewer’s figure. Lauren McCarthy, meanwhile, experimented with the tensions between intimacy and privacy that one is confronted with while interacting with a machine at home. To explore this, the artist created a human version of a smart home intelligence system.

Digital art and design collective Universal Everything took over the lobby space of the galleries, placing digital avatars that mimicked visitors’ movements around the corridors of the entrance hall. Throughout the duration of the show, the system was learning ergonomic abilities, which began as rather childlike and had evolved into a more developed idea of the moving human body by the end of its run at the Barbican.

Interdependence and mutual influence between the organic and synthetic were best visualised in the work by architect, designer and MIT professor Neri Oxman. The work originated in her MIT research lab, The Mediated Matter Group. The group explores the reciprocity of Nature-inspired design and design-inspired Nature, the synergy of thought and matter, and the science of materials to enhance the relationship between natural and man-made environments. Their research area, called Material Ecology, enhances the mediation between objects and the environment, between humans and objects, and between humans and the environment.

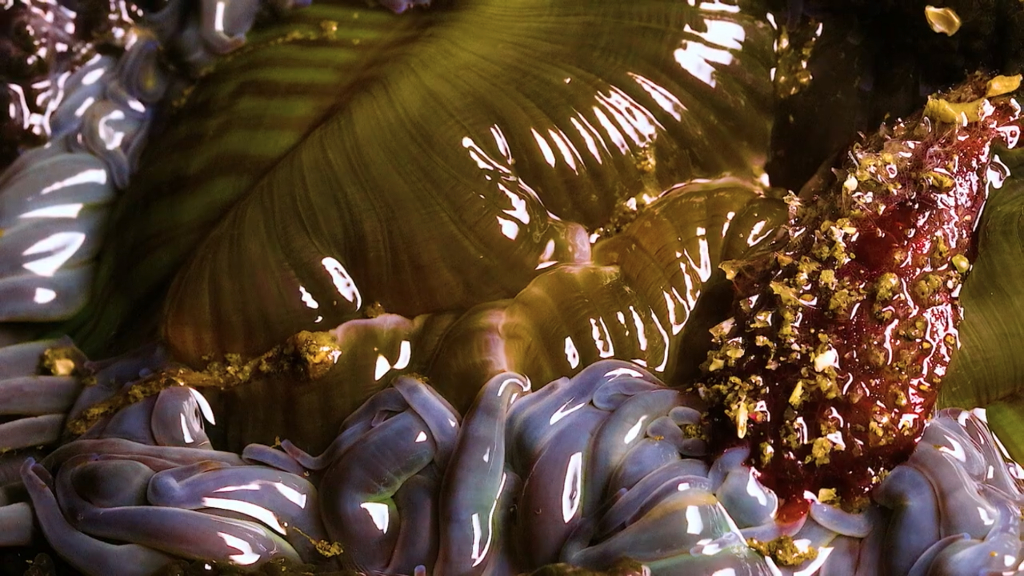

At Barbican Centre, one could view Vespers, a collection of 3D-printed death masks with designs that cultivate new life after death, as they contain microorganisms producing pigments and chemical substances such as vitamins, antibodies or antimicrobial drugs. Inhabited by living microorganisms that have been synthetically engineered by Oxman’s team, the death masks explore the concept of rebirth, given to the dead human by the synthetic that outlives her or him.

Fascinated by the interrelation between the organic, the synthetic and the digital, Massive Attack encoded their album Mezzanine in strands of synthetic DNA held in a spray-paint can, showing that music and technology also collide and reflecting on contemporary solutions to data storage.

The medium of sound, in relation to computer-driven contemporaneity, was also represented by artist and electronic musician Kode9, who presented a newly commissioned sound installation on the golem, an audio essay that opened the discourse inside the show with stories of mythical, artificial, man-made creatures. Japanese art and technology specialist Daito Manabe from Rhizomatiks and neuroscientist Yukiyasu Kamitani presented Dissonant Imaginary.

Using fMRI-based brain decoding technology to generate visual imagery from brain activity that changes according to sound, this collaborative research art project investigated the relationship between sound and images. Further, the collective Qosmo invited the visitor to make music together with AI, pointing out that the machine is there to extend human creativity, expanding what humans create so that they can then enhance it even further themselves.

AI is, for me, a programmed body in the form of a synthetic machine, just as a human body is programmed by body memories orchestrated by the unconscious psyche. Giving in to the unconscious powers, letting go of control and joining the vibrational energy flow might, in the end, be the only right way to live and to know. At the Barbican, the perceptive power of intuition was highlighted, for example, in Resurrecting The Sublime by Christina Agapakis of Ginkgo Bioworks, Alexandra Daisy Ginsberg and Sissel Tolaas. This collaborative work focuses on the human sense of smell, pointing to embodied knowledge, which extends so far beyond the cognitive.

The artists asked questions about our relationship with nature and about the decisions we make through our thoughts or movements. Humans, as sentient beings, spend so much time in front of the screen. Why are we not keen to know more about the world by using all the senses we were given at birth? Possibly, it is just the interface that needs to be changed. I hope that one day, as a curator, I will also know how smell can be documented and stored as digital data to be shared with you, for example, now, in the ether of the online.

In conclusion, it would be good to speculate about the future. Thankfully, there is Lawrence Lek, who can imagine and visualise more than anyone else. During the AI show at the Barbican, the artist presented an open-world video game, 2065, set in a time when, thanks to automation, we no longer have to work and spend the whole day playing video games. The most important element of this idea is that every player starts the game with the same intention: ‘they all want to be somewhere else’. I believe this is the message: when one has nothing to do but play, one wants to be somewhere else.

Could I compare the life of the present day to a game? I am not sure, but the desire to escape current reality, exchanging being here and now for abstract dreaming, overthinking or simply overdosing and drinking, has been a way of coping with the material world for a good part of present-day humanity. I am not at all against virtual reality. I am quite an advocate of multidimensionality, but I believe that belonging to worlds other than material reality is productive only when it happens in simultaneity, as expansion instead of mere replacement.

Have you heard that in the 17th century computers were human, or rather, humans were called computers? During the 20th century, due to wartime havoc on the male gender, human computers were mostly female, calculating, for example, the number of rockets needed to make a plane airborne. To wrap up, it would be good to come back to the origin of AI, the machine’s first algorithm, and Ada Lovelace, the first to recognise that the machine could go beyond mere counting. This extraordinary woman, who gave birth to the first computer algorithm, is believed to have got there thanks to her familiarity with the patterns, compositions and repetitions of textile designs, for example.

We now know from Lovelace’s notes that her sensitivity to music and her analytic approach to understanding it also led her to sense something not yet known, not seen by the eye of the male. I want to point out, at the end of this paper, that the origin of Artificial Intelligence lies in the feminine instinct, the unconscious knowledge that moves the body and awakens the brain to fire neurons, so that the mind can welcome innovative ideas like this one, like AI, this lifeless material, this inanimate form that was born only thanks to the fertile analytical brain.