Interview by Allan Gardner

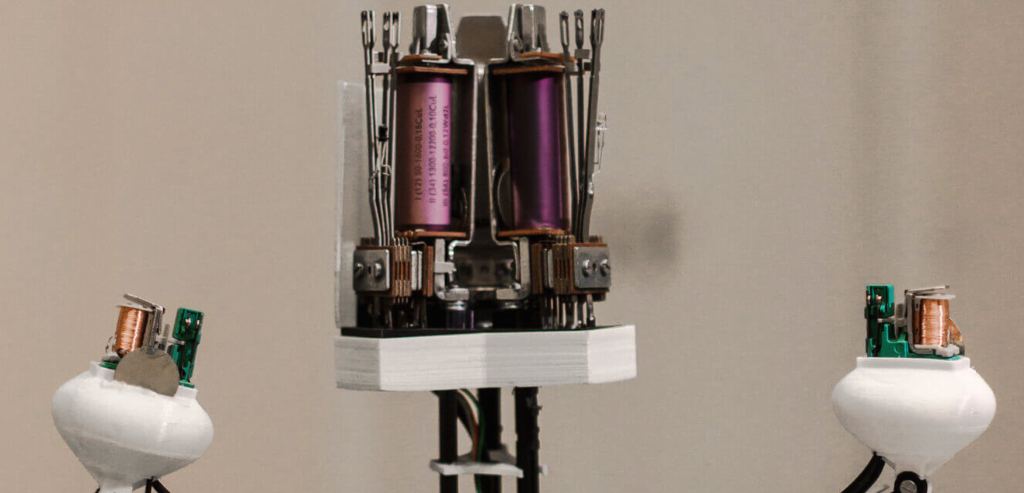

Moritz Simon Geist is a media artist and robotic musician. In his work, he uses everything from the clicking of failing hard drives and small motors beating on metal to 3D-printed robo-kalimbas and more. Triggering these via MIDI, he works as a kind of human composer for a robotic orchestra, building and conducting its many moving parts through the production and performance of music.

Consider his 2013 work MR-808 Interactive, a physical extrapolation of the classic Roland drum machine built into a 4 x 4 m installation. In this piece, robots play the individual instruments of the TR-808 in the way the drum machine originally triggered its sounds, adding a new dimension to their tonal quality and to the interaction between robots and human users. This expanded dimension of robotic sound generation runs through much of his body of work.

Now, Geist has published a new record, Robotic Electronic Music, played entirely by robots. Geist assembles these robots in ways that let him record or trigger their movements. What sets this process apart from the traditional production of electronic music is how tactile the sound production is.

These are analogue sounds produced in a physical environment by physical (mechanised) things. As a kind of composer and conductor for these robots, Geist can physically manipulate both the robotics (for example, the speed of motors or the stress on a crashing hard drive) and the objects they interact with to generate tones.

On Robotic Electronic Music’s ‘G#’, the robots play tuned piano strings, prepared in advance to generate resonant tones. The title refers to Terry Riley’s composition ‘In C’, alluding both to the lineage of experimental composition in Geist’s work and to the physicality of layering in music production, particularly the way loops are used in contemporary music-making.

This is one of the most interesting things about Geist’s robots: their potential for generating organic sound. These robots are not tools like a player piano or a synth workstation. They are collaborators that need care and attention to function as well as possible, and they can be negotiated with through adjustments. They can play an object or interact with an environment in ways that call for reconsideration: maybe a room with more natural reverb, maybe a tiny metal box. To me, they feel much closer to players in an orchestra, musical collaborators, than to instruments.

For those who are not familiar with your work, how and when did your interest in fusing robotics and music come about?

When I was young, my parents gave me an educational box for learning electronics. I learned how to solder and started taking things apart around the house, much to the horror of my parents. Later, I wanted to fuse my interest in taking things apart with electronic music. One day, I had just quit a (human) band and didn’t want any band members anymore. At the same time, I still needed a band! It grew bigger and bigger over the years; I did one big installation (MR-808), which was a huge success in the media arts scene. Then I met Mouse on Mars, and I still keep taking things apart, blowing stuff up and turning it into music, and it works!

Mechanised instruments have been around for a while. What makes these robots different or novel?

When you think of music robots, you normally think either of big human-like robots that play the drums or some other classical instrument, or of old mechanical instruments like mechanized organs. Neither interests me. Over the years, I found that by replacing the electronic sound generation in electronic music with robots and small mechanisms and integrating them into a musical piece, I can create something I can’t hear anywhere else.

I am interested in a futuristic approach, not in replicating sounds that have already been done (like a robot drummer). Electronic music made with robots is more haptic, as I can actually see where the sound is coming from and manipulate the sound source with my hands. I can also take sounds that I would normally sample and turn them into robots. Through this, I can create a soundscape that is both organic and mechanical, something I miss in electronic music nowadays.

What was the intellectual process behind the concept of the album?

I started with an installation called Glitch Robots in 2014–15. I was interested in small mechanical sounds and how to make them big through amplification. I referred back to the Glitch and Cut aesthetics of the 90s, using old hard drives, metal tongues, relays, motors, etc.

When I got to the Mouse on Mars studio in 2016 to start working on my debut, I realized that the sounds were not strong enough for a whole record. So for two years, I went back and forth between Andi’s studio and my workshop, constantly building more robots, recording and tweaking. Step by step, we approached the idea of being “inside the machine”, surrounded by all these robotized mechanics and precise sounds.

Which robot or instrument do you have a particular soft spot for, and why?

Haha, all of them! But I have a futuristic Kalimba, which sounds especially good and is especially hard to tune. I love it and hate it at the same time, because it is always going out of tune! I needed a bass sound, so I looked for anything that makes a good sine-wave sound in real life. I realized that tongues, as used in a Kalimba (thumb piano), make pretty good sine waves, so I built several prototypes.

You can tune them by changing the length of the metal tongue and the pressure on it. I strike it with a solenoid, which is triggered by MIDI, so I can play it like a normal MIDI drum machine: if I press a button, the Kalimba plays. It’s very precise, with a latency of only 10 ms.
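For readers curious about the mechanics, the idea of “press a button, the Kalimba plays” can be sketched roughly as follows. This is not Geist’s actual code: the note-to-solenoid map, the pulse lengths and the `parse_note_on` helper are hypothetical. The sketch only decodes a standard three-byte MIDI note-on message and, as an assumption, turns its velocity into a longer or shorter solenoid pulse.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical mapping from MIDI note numbers to solenoid driver channels.
NOTE_TO_SOLENOID = {36: 0, 38: 1, 42: 2}

# Assumed pulse range in milliseconds; real values depend on the solenoid.
MIN_PULSE_MS = 2.0
MAX_PULSE_MS = 10.0


@dataclass
class Strike:
    channel: int     # which solenoid to fire
    pulse_ms: float  # how long to energize it


def parse_note_on(message: bytes) -> Optional[Strike]:
    """Turn a 3-byte MIDI note-on message into a solenoid strike, or None."""
    if len(message) != 3:
        return None
    status, note, velocity = message
    # Note-on status bytes are 0x90-0x9F; velocity 0 conventionally means note-off.
    if status & 0xF0 != 0x90 or velocity == 0:
        return None
    channel = NOTE_TO_SOLENOID.get(note)
    if channel is None:
        return None  # note not mapped to any solenoid
    # Assumption: a longer pulse drives the plunger harder into the tongue.
    pulse = MIN_PULSE_MS + (MAX_PULSE_MS - MIN_PULSE_MS) * (velocity / 127)
    return Strike(channel, pulse)


if __name__ == "__main__":
    print(parse_note_on(bytes([0x90, 36, 127])))  # full-velocity hit on note 36
    print(parse_note_on(bytes([0x90, 36, 0])))    # velocity 0: treated as note-off
```

Mapping velocity to pulse length is just one plausible design; a real rig might instead vary drive voltage or keep a fixed pulse and rely on mechanical adjustment.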

Do you plan to train the robots using AI and neural networks?

Yes, I have thought about it many times! It’s a very cool and super interesting topic. A lot of people are already working in this field, like Google Magenta. Right now, I am concentrating on the sound-generating part and on performances. But my friends and I have several projects lined up where we want to combine generative composition (machine learning techniques) with robotic sounds. It’s a natural fit.

You said you’ve worked with Mouse on Mars and dug deep into the history of mechanical music and the experiments of early electronic music. What has been your most surprising finding?

Together with Mouse on Mars and Tyondai Braxton, I have been involved in a project playing ‘In C’ by Terry Riley. It is striking how pieces created decades ago shape today’s music, like the idea of overlaid iterative patterns, or of patterns you can put together freely that still fit, a little like Ableton Live! Apart from that, I realized once again that we repeat ourselves. Tape loops, generative music, bent vinyl records: it was all there decades ago!